This project aims to visualize class similarities in large-scale classifiers such as deep neural networks. These similarities usually define a hierarchical structure over the classes. Understanding this structure helps explain the classifier's behaviour and its errors, because the structure strongly influences which features the classifier learns.
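As a rough illustration of what a class similarity matrix can look like in code, the sketch below correlates the per-class score columns of a classifier over a set of samples. This is only one possible similarity measure, chosen here for brevity; it is an assumption and not necessarily the measure used in the article. The function name `class_similarity` and the synthetic `scores` array are hypothetical.

```python
import numpy as np


def class_similarity(scores):
    """Pearson correlation between the score columns of each pair of classes.

    scores: array of shape (n_samples, n_classes), e.g. logits or
    probabilities produced by a classifier (assumed input format).
    Note: a class whose score is constant over all samples yields NaN.
    """
    scores = np.asarray(scores, dtype=float)
    # rowvar=False treats each column (class) as one variable.
    return np.corrcoef(scores, rowvar=False)


# Synthetic example: classes 0 and 1 share a signal, class 2 is independent.
rng = np.random.default_rng(0)
shared = rng.normal(size=(500, 1))
scores = np.hstack([
    shared + 0.1 * rng.normal(size=(500, 1)),
    shared + 0.1 * rng.normal(size=(500, 1)),
    rng.normal(size=(500, 1)),
])
sim = class_similarity(scores)
```

In this toy setup, `sim[0, 1]` is close to 1 while `sim[0, 2]` is close to 0, which is the kind of contrast that shows up as blocks when the matrix is drawn as a heatmap.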

Explore the classification structure in MIT Places365, classified using ResNet-18 as implemented in PyTorch.

View Jupyter Notebook

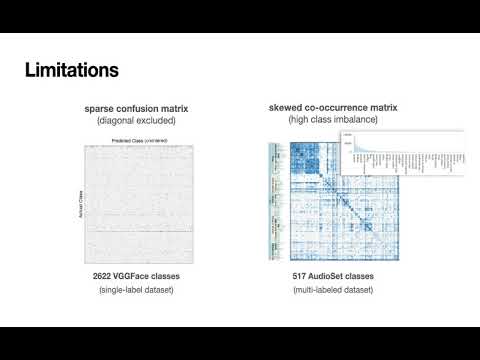

Below are class similarity matrices computed for two large-scale classifiers.

Each row / column is one of 527 classes (audio events) from Google's AudioSet. The dataset is multi-label and highly class-imbalanced. The major similarity groups exposed by visualizing the matrix are music, inside, organism, and outside. This suggests that the CNN develops shared features that characterize each of these groups.

Each row / column is one of 2622 classes (celebrities) from Oxford's VGGFace Dataset. Male vs. female celebrities define the two major blocks in the matrix. Prominent subgroups in these blocks are based on wrinkles and hair / skin color. Chromaticity defines two of these subgroups (split marked in red), which indicates that the CNN uses unreliable features to recognize the corresponding celebrities.
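Block structure like the one described above only becomes visible when similar classes are placed next to each other. A minimal sketch of one way to obtain such an ordering is shown here, assuming SciPy is available and using average-linkage hierarchical clustering on `1 - similarity`. The clustering method and the helper name `block_order` are assumptions made for illustration, not the procedure from the article.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, leaves_list
from scipy.spatial.distance import squareform


def block_order(sim):
    """Return a class ordering that places similar classes next to each other."""
    dist = 1.0 - np.asarray(sim, dtype=float)
    dist = (dist + dist.T) / 2.0   # enforce exact symmetry
    np.fill_diagonal(dist, 0.0)    # a class has zero distance to itself
    condensed = squareform(dist, checks=False)
    tree = linkage(condensed, method="average")
    return leaves_list(tree)


# Toy similarity matrix: two groups of classes, interleaved on purpose.
groups = np.array([0, 1, 0, 1, 0, 1])
sim = np.where(groups[:, None] == groups[None, :], 0.9, 0.1)
np.fill_diagonal(sim, 1.0)

order = block_order(sim)
reordered = sim[np.ix_(order, order)]  # rows and columns permuted together
```

Plotting `reordered` as a heatmap (for example with Matplotlib's `imshow`) would show two square blocks along the diagonal, whereas the original `sim` looks like a checkerboard.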

Here is an article describing how we compute class similarity and how we visualize the class similarity matrix, with examples on five datasets. The article was accepted to the WHI Workshop @ICML 2020.

Here is a poster summarizing the work, presented at the XC Workshop @ICML 2020.

You can cite the article as follows:

    @inproceedings{whi2020visualizing,
      title={Visualizing Classification Structure of Large-Scale Classifiers},
      author={Bilal Alsallakh and Zhixin Yan and Shabnam Ghaffarzadegan and Zeng Dai and Liu Ren},
      booktitle={ICML Workshop on Human Interpretability in Machine Learning (WHI)},
      year={2020},
    }

If you have any suggestions or feedback, we will be happy to hear from you!