
Strange values after converting 3D joint positions from world to camera coordinates #29


@gulvarol Hi Gül, I am still confused about the values of the 3D joint data:


@GianMassimiani were you able to solve the problem?

Hi, thanks for the great dataset! I used some of the code you provided (e.g. for retrieving the camera extrinsic matrix) to convert 3D joint positions from world to camera coordinates. However, the values of joint positions in camera coordinates seem a bit strange to me. Here is the code:

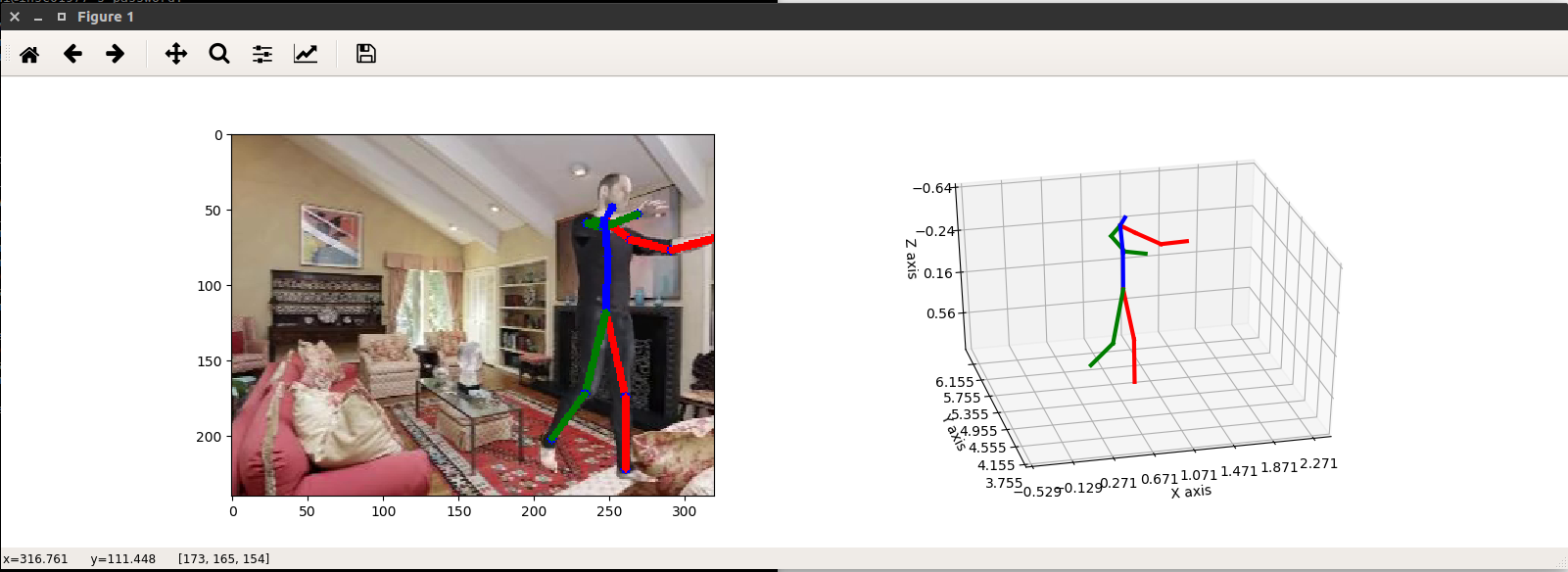
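For reference, a world-to-camera conversion of this kind can be sketched as below. This is a minimal sketch and not the code from the image. It assumes a 3×3 world-to-camera rotation `R`, a camera location `cam_loc` given in world coordinates, and a computer-vision camera convention (x right, y down, z forward). Under that assumption a joint's depth is its z coordinate in camera space. The function name `world_to_camera` and the example camera pose are hypothetical.

```python
import numpy as np


def world_to_camera(joints_world, R, cam_loc):
    """Map (N, 3) world-space joint positions into camera space.

    Assumes R is the 3x3 world-to-camera rotation and cam_loc is the camera
    centre in world coordinates, i.e. X_cam = R @ (X_world - cam_loc).
    """
    joints_world = np.asarray(joints_world, dtype=float)
    cam_loc = np.asarray(cam_loc, dtype=float)
    t = -R @ cam_loc                 # translation column of the 3x4 extrinsic [R | t]
    return joints_world @ R.T + t    # row-wise equivalent of R @ X + t


# Hypothetical pose: camera 5 m from the origin along world +x, looking back
# at the origin, with world +z as "up" (so camera +y, "down", is world -z).
R = np.array([[0.0, 1.0, 0.0],     # camera x axis (right) in world coordinates
              [0.0, 0.0, -1.0],    # camera y axis (down)
              [-1.0, 0.0, 0.0]])   # camera z axis (forward, i.e. depth)
cam_loc = [5.0, 0.0, 0.0]

joints_cam = world_to_camera([[0.0, 0.0, 0.0], [0.0, 0.0, 1.0]], R, cam_loc)
print(joints_cam)  # depth is the last column (z), here 5 m for both joints
```

The rotation rows are the camera axes expressed in world coordinates, which makes it easy to see which world direction ends up on which camera axis when plotting.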

When I plot the joints in 3D, I get strange depth values (see the Y axis in the figure below). For example, in the image below the subject appears very close to the camera, yet its position on the Y axis (computed with the code above) is about 6 meters, which seems quite unrealistic to me:
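One way to tell whether a depth of about 6 meters comes from the conversion or from the data itself: a rigid world-to-camera transform preserves distances, so the norm of each camera-space joint must equal that joint's world-space distance to the camera location. The sketch below assumes `R` is a 3×3 world-to-camera rotation and `cam_loc` is the camera position in world coordinates; the function name `check_conversion` and the example values are hypothetical.

```python
import numpy as np


def check_conversion(joints_world, R, cam_loc):
    """Sanity-check a world-to-camera conversion X_cam = R @ (X_world - cam_loc).

    Returns the camera-space joints and each joint's distance to the camera.
    Raises AssertionError if R is not a proper rotation or distances change.
    """
    joints_world = np.asarray(joints_world, dtype=float)
    cam_loc = np.asarray(cam_loc, dtype=float)

    # A valid rotation is orthonormal with determinant +1.
    assert np.allclose(R @ R.T, np.eye(3)), "R is not orthonormal"
    assert np.isclose(np.linalg.det(R), 1.0), "R is a reflection, not a rotation"

    joints_cam = (joints_world - cam_loc) @ R.T

    # Rigid transforms preserve distances: the camera-space norm of each joint
    # must equal its world-space distance to the camera centre.
    dist_world = np.linalg.norm(joints_world - cam_loc, axis=1)
    dist_cam = np.linalg.norm(joints_cam, axis=1)
    assert np.allclose(dist_world, dist_cam), "conversion changed distances"
    return joints_cam, dist_world


# Hypothetical rotation (rows = camera x, y, z axes in world coordinates).
R = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, -1.0],
              [-1.0, 0.0, 0.0]])
joints_cam, dist = check_conversion([[0.0, 0.0, 1.0]], R, [5.0, 0.0, 0.0])
print(dist)  # world-space distance from the camera to each joint
```

If `dist` is already about 6 m for the subject, the value comes from the world-space joints and camera location rather than from the conversion; if `dist` is small but a camera-space coordinate is large, the transform is the place to look.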

Do you have any idea why this is happening? Thanks
