📝 RLFH UX Skeleton #31

If we were to send the feedback to the model, do we know whether it would have any impact on the responses? Without that, I'm not sure this change aligns with our recently updated first principles, since it doesn't seem like we're currently completing the loop on the AI usage pattern.

No, SK doesn't hook up to any feedback loop with the models, so it would be up to developers to build and expose their own endpoints to train their models. This was a big push from John to showcase one of the first principles of LLMs in general.

OK, yeah, that makes sense. Thanks for sharing this; the video is very insightful.

I think this will help a lot, especially if we can emphasize some of what you said here: that they will need to train their own models.

Is there a simple implementation we can bring in? If we have buttons in the UI that are not wired up, people are going to wonder why they aren't working. A simple option could be to use the feedback as few-shot examples in conjunction with the chat message history. Could we add a state to each message?

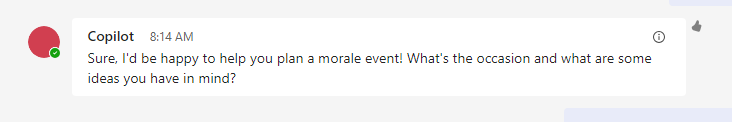

### Motivation and Context

Added RLHF UI to demonstrate that users can easily add this capability to their chatbot if they want to.

### Description

> This feature is for demonstration purposes only. We don't actually hook up to the server to store the human feedback or send it to the model.

Details:

- RLHF actions will only show on the most recent chat message in which the author is `bot`.
- If the user takes an action, an icon will be rendered to reflect that action.
- Data is only stored in frontend state. If the app refreshes, all RLHF feedback across all chats will be reset.

Actions on chat message:

Once user takes actions:

Future work

- Add ability to turn off RLHF in settings dialog + add help link to docs there

### Contribution Checklist

- [x] The code builds clean without any errors or warnings
- [x] The PR follows the [Contribution Guidelines](https:/microsoft/copilot-chat/blob/main/CONTRIBUTING.md) and the [pre-submission formatting script](https:/microsoft/copilot-chat/blob/main/CONTRIBUTING.md#development-scripts) raises no violations
- ~~[ ] All unit tests pass, and I have added new tests where possible~~
- [x] I didn't break anyone 😄
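For reviewers who want the display rules from the Details list in concrete form, here is a minimal sketch. It is not the code in this PR: the names `ChatMessage`, `feedbackUiFor`, and `recordFeedback` are hypothetical, and it assumes that "most recent chat message in which the author is `bot`" means the last bot-authored message in the chat. Feedback lives only in the in-memory message array, so it is lost on refresh, matching the demo-only behavior described above.

```typescript
// Hypothetical types for illustration; not taken from the copilot-chat codebase.
type Author = 'bot' | 'user';
type Feedback = 'positive' | 'negative';
type FeedbackUi = 'actions' | 'icon' | 'none';

interface ChatMessage {
    id: string;
    author: Author;
    content: string;
    feedback?: Feedback; // unset until the user takes an action
}

// Index of the most recent bot-authored message, or -1 if there is none.
function lastBotMessageIndex(messages: readonly ChatMessage[]): number {
    for (let i = messages.length - 1; i >= 0; i--) {
        if (messages[i]?.author === 'bot') return i;
    }
    return -1;
}

// Decide what to render for the message at `index`:
// - 'icon' if the user already gave feedback on this bot message,
// - 'actions' if it is the most recent bot message with no feedback yet,
// - 'none' otherwise (user messages, older bot messages without feedback).
function feedbackUiFor(messages: readonly ChatMessage[], index: number): FeedbackUi {
    const message = messages[index];
    if (message === undefined || message.author !== 'bot') return 'none';
    if (message.feedback !== undefined) return 'icon';
    return index === lastBotMessageIndex(messages) ? 'actions' : 'none';
}

// Return a new array with feedback recorded on the matching bot message.
// Nothing is persisted or sent to a server; the state is frontend-only.
function recordFeedback(
    messages: readonly ChatMessage[],
    id: string,
    feedback: Feedback,
): ChatMessage[] {
    return messages.map((m) =>
        m.id === id && m.author === 'bot' ? { ...m, feedback } : m,
    );
}
```

Keeping the update immutable (a new array instead of mutating in place) fits the usual pattern for frontend state stores. A developer who wants to close the loop would replace or extend `recordFeedback` with a call to their own endpoint.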
